The Evolution of Data Pipeline Construction

Organizations now generate large volumes of data across many touchpoints, and the traditional approach to Extract, Transform, Load (ETL) processes is changing as a result. AI-powered ETL tools apply machine learning to pipeline construction, automating work such as schema recognition, field mapping, and quality checks that previously had to be configured by hand.

The complexity of modern data ecosystems demands intelligent solutions that can adapt, learn, and optimize themselves without constant human intervention. Traditional ETL tools, while functional, often require extensive manual configuration and struggle with the dynamic nature of contemporary data sources. AI-powered alternatives bring machine learning algorithms directly into the pipeline construction process, creating systems that become more efficient over time.

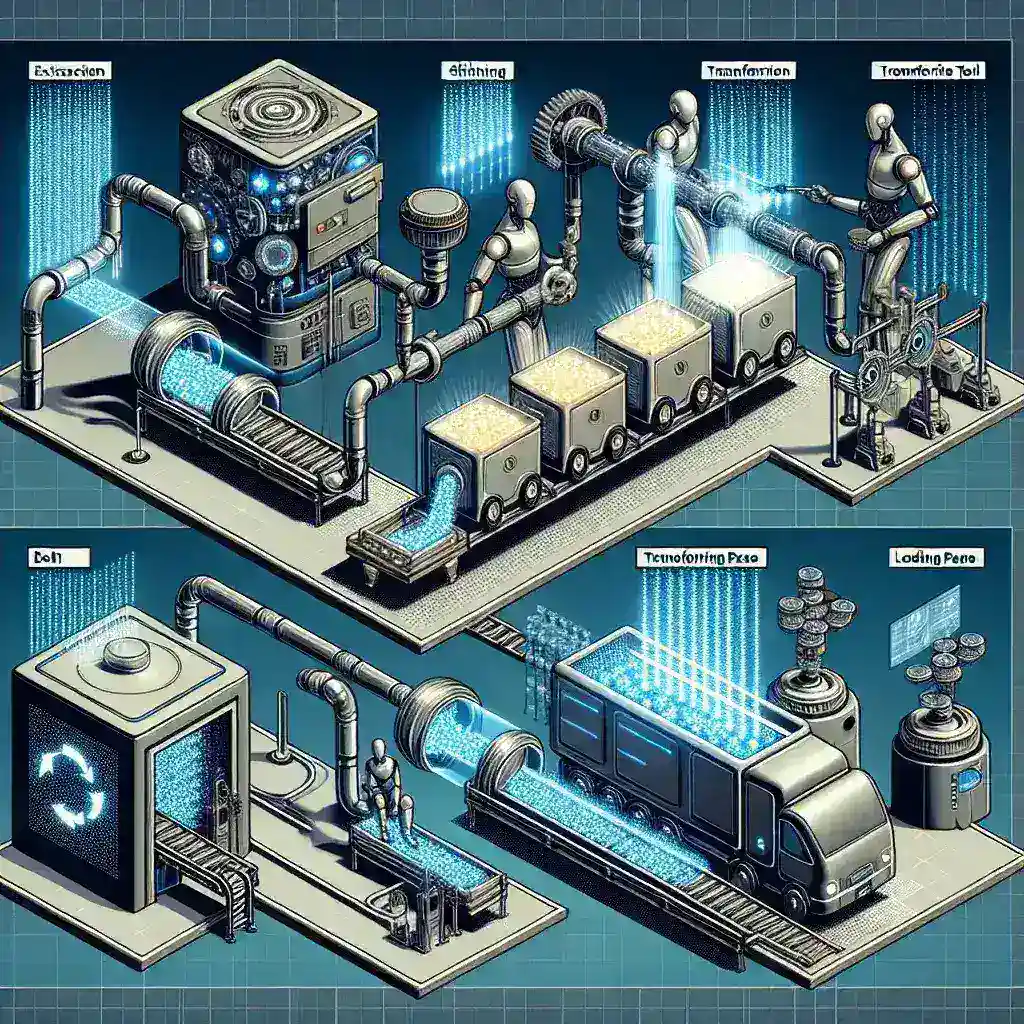

Understanding AI-Enhanced ETL Architecture

The fundamental architecture of AI-powered ETL tools differs significantly from their conventional counterparts. These systems incorporate machine learning algorithms at every stage of the data processing workflow, from initial data discovery to final load optimization. The extraction phase benefits from intelligent source detection and automatic schema recognition, while transformation processes leverage natural language processing and pattern recognition to understand data relationships.

Modern AI-powered platforms utilize neural networks to identify data quality issues before they propagate through the pipeline. This proactive approach to data validation represents a paradigm shift from reactive error handling to predictive quality assurance. The load phase incorporates intelligent scheduling algorithms that optimize resource utilization based on historical patterns and real-time system performance metrics.

Key Components of Intelligent Data Pipelines

The core components of AI-enhanced ETL systems include automated data profiling engines, intelligent mapping generators, and adaptive transformation modules. Automated data profiling uses statistical analysis and machine learning to understand data characteristics, identifying patterns, anomalies, and relationships without manual intervention. This capability dramatically reduces the time required for initial pipeline setup and ongoing maintenance.
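
To make the profiling idea concrete, here is a minimal sketch of what a column profiler might compute. It uses plain statistics rather than a trained model, and the function name `profile_column` and the two-standard-deviation outlier threshold are assumptions chosen for illustration, not features of any particular product.

```python
from collections import Counter
from statistics import mean, pstdev


def profile_column(values):
    """Summarize one column: null rate, inferred type, distinct count, outliers."""
    non_null = [v for v in values if v is not None and v != ""]
    null_rate = 1 - len(non_null) / len(values) if values else 0.0

    # Try to read every non-null value as a number; stop at the first failure.
    numeric = []
    for v in non_null:
        try:
            numeric.append(float(v))
        except (TypeError, ValueError):
            break
    is_numeric = bool(non_null) and len(numeric) == len(non_null)

    profile = {
        "null_rate": round(null_rate, 3),
        "inferred_type": "numeric" if is_numeric else "text",
        "distinct": len(set(non_null)),
    }
    if is_numeric and len(numeric) > 1:
        mu, sigma = mean(numeric), pstdev(numeric)
        # Assumed rule: flag values more than 2 standard deviations from the mean.
        profile["outliers"] = [x for x in numeric if sigma and abs(x - mu) > 2 * sigma]
    else:
        profile["most_common"] = Counter(non_null).most_common(1)
    return profile


if __name__ == "__main__":
    print(profile_column([10, 11, 9, 10, 12, 11, 10, 500, None]))
    print(profile_column(["a", "b", "a", ""]))
```

A production profiler would add many more checks (value formats, cross-column relationships, drift over time), but the output shape is similar: a small summary per column that later pipeline stages can act on.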

Intelligent mapping generators employ semantic analysis to automatically suggest field mappings between source and target systems. These tools analyze data content, field names, and historical mapping patterns to propose optimal transformation logic. The accuracy of these suggestions improves over time as the system learns from user feedback and successful pipeline executions.
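
As a rough illustration of mapping suggestion, the sketch below scores field names by string similarity using Python's standard `difflib` module. This is a simple stand-in for the semantic analysis described above: real tools would also look at data content and past mappings. The names `suggest_mappings` and `normalize` and the 0.6 threshold are hypothetical.

```python
from difflib import SequenceMatcher


def normalize(name):
    """Lowercase a field name and drop common separators."""
    return name.lower().replace("_", "").replace("-", "").replace(" ", "")


def suggest_mappings(source_fields, target_fields, threshold=0.6):
    """Return {source: (best_target_or_None, score)} based on name similarity."""
    suggestions = {}
    for src in source_fields:
        scored = [
            (SequenceMatcher(None, normalize(src), normalize(tgt)).ratio(), tgt)
            for tgt in target_fields
        ]
        score, best = max(scored)
        # Below the assumed threshold, propose nothing and leave it to a human.
        suggestions[src] = (best if score >= threshold else None, round(score, 2))
    return suggestions


if __name__ == "__main__":
    source = ["cust_name", "EmailAddress", "zip"]
    target = ["customer_name", "email_address", "postal_code"]
    for src, (tgt, score) in suggest_mappings(source, target).items():
        print(f"{src} -> {tgt} (score {score})")
```

Note that `zip` is not matched to `postal_code` here, because the two names share almost no characters. That gap is exactly where content-based and feedback-driven matching would need to take over from name similarity.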

The Business Impact of AI-Powered Data Pipelines

Organizations implementing AI-powered ETL tools report improvements in operational efficiency and data quality. According to one industry survey, companies using intelligent data pipeline solutions saw an average 60% reduction in pipeline development time and a 45% decrease in data quality issues. Improvements of this kind translate into faster time-to-insight and more reliable business intelligence.

The financial implications extend beyond operational savings. Intelligent data pipelines enable organizations to process larger volumes of data with fewer resources, reducing infrastructure costs while improving scalability. The self-optimizing nature of these systems means that performance improvements compound over time, creating increasingly valuable data assets.

Real-World Implementation Success Stories

Consider the case of a multinational retail corporation that implemented AI-powered ETL tools to consolidate data from over 500 stores across 20 countries. The traditional approach would have required months of manual configuration and ongoing maintenance. Instead, the AI-powered solution automatically detected data patterns, established optimal transformation rules, and created a unified data model in just three weeks.

The system’s machine learning capabilities identified seasonal patterns in sales data that human analysts had missed, leading to improved inventory management and a 12% increase in overall profitability. This example illustrates how AI-powered ETL tools can deliver insights that extend far beyond simple data movement and transformation.

Technical Advantages and Capabilities

The technical sophistication of modern AI-powered ETL platforms encompasses several breakthrough capabilities. Automatic schema evolution allows pipelines to adapt to changes in source data structures without manual intervention. When new fields are added or existing fields are modified, the system automatically adjusts transformation logic and target schemas to accommodate these changes.
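
One way to picture schema evolution is as a diff between the schema a pipeline already knows and the one it just observed. The sketch below assumes schemas are simple `{field: type}` dictionaries and applies only additive changes automatically, holding removals and type changes for review. The function names and that policy are assumptions for illustration.

```python
def diff_schema(known, incoming):
    """Compare two {field: type} mappings and report what changed."""
    added = {f: t for f, t in incoming.items() if f not in known}
    removed = [f for f in known if f not in incoming]
    changed = {
        f: (known[f], t)
        for f, t in incoming.items()
        if f in known and known[f] != t
    }
    return added, removed, changed


def evolve_schema(known, incoming):
    """Apply additive changes; return the rest for human review."""
    added, removed, changed = diff_schema(known, incoming)
    evolved = dict(known)
    evolved.update(added)  # new fields are assumed safe to add automatically
    review = {"removed": removed, "type_changed": changed}
    return evolved, review


if __name__ == "__main__":
    known = {"id": "int", "email": "str", "age": "int"}
    incoming = {"id": "int", "email": "str", "age": "str", "country": "str"}
    evolved, review = evolve_schema(known, incoming)
    print(evolved)
    print(review)
```

Keeping destructive changes out of the automatic path is a conservative design choice: a dropped or retyped field can silently break downstream consumers, so it is worth surfacing even in a highly automated pipeline.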

Advanced error handling mechanisms use machine learning to predict and prevent pipeline failures before they occur. These systems analyze historical failure patterns, system resource utilization, and data quality metrics to identify potential issues proactively. When problems do arise, intelligent recovery mechanisms can automatically implement corrective actions or route data through alternative processing paths.
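
The routing behavior described above can be sketched without any machine learning at all. In the example below, a recent failure rate stands in for a learned risk model, and a step is sent down an alternative path either preemptively (when risk is high) or after an error. All names (`failure_risk`, `run_step`) and the thresholds are hypothetical.

```python
def failure_risk(history, window=5):
    """Share of failed runs among the most recent `window` outcomes."""
    recent = history[-window:]
    return sum(1 for ok in recent if not ok) / len(recent) if recent else 0.0


def run_step(batch, primary, fallback, history, risk_threshold=0.5):
    """Run `primary`, switching to `fallback` when risk is high or an error occurs.

    `history` is a list of booleans (True = success) and is updated in place.
    """
    if failure_risk(history) >= risk_threshold:
        return fallback(batch), "fallback (preemptive)"
    try:
        result = primary(batch)
        history.append(True)
        return result, "primary"
    except Exception:
        history.append(False)
        return fallback(batch), "fallback (after error)"


if __name__ == "__main__":
    def flaky_transform(batch):
        raise ValueError("upstream service unavailable")

    def safe_transform(batch):
        return list(batch)  # simplified alternative processing path

    history = [True, True]
    for _ in range(3):
        print(run_step([1, 2, 3], flaky_transform, safe_transform, history))
```

A real system would replace `failure_risk` with a model that also considers resource utilization and data quality metrics, and would periodically retry the primary path so that it can recover once the underlying problem is resolved.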

Integration with Modern Data Ecosystems

AI-powered ETL tools excel in cloud-native environments, leveraging distributed computing resources to handle massive data volumes efficiently. These platforms integrate seamlessly with popular cloud services, automatically scaling compute resources based on workload demands. The integration extends to modern data storage solutions, including data lakes, cloud warehouses, and real-time streaming platforms.

The compatibility with emerging technologies such as containerization and microservices architecture makes these tools ideal for organizations pursuing digital transformation initiatives. API-first design principles ensure that AI-powered ETL capabilities can be embedded within existing data workflows and business applications.

Best Practices for Implementation

Successful implementation of AI-powered ETL tools requires careful planning and adherence to proven best practices. The initial phase should focus on data discovery and quality assessment, allowing the AI systems to learn the characteristics of your specific data environment. This learning period is crucial for optimal performance and should not be rushed.

Data governance remains critical even with intelligent automation. Organizations must establish clear policies for data access, quality standards, and compliance requirements. AI-powered tools can enforce these policies automatically, but they must be properly configured with organizational requirements and regulatory constraints.

Change Management and Team Preparation

The transition to AI-powered ETL tools requires significant change management efforts. Data engineering teams need training on new concepts such as machine learning pipeline optimization and automated quality monitoring. However, this investment in education pays dividends through improved productivity and reduced manual workload.

Collaboration between data engineers, data scientists, and business stakeholders becomes more important with AI-powered tools. These platforms can surface insights and patterns that require domain expertise to interpret correctly. Establishing cross-functional teams ensures that technical capabilities align with business objectives.

Overcoming Common Implementation Challenges

Organizations frequently encounter specific challenges when implementing AI-powered ETL solutions. Data quality issues can initially confuse machine learning algorithms, leading to suboptimal automation suggestions. The solution involves implementing robust data cleansing processes before AI training and maintaining high data quality standards throughout the organization.

Legacy system integration presents another common challenge. AI-powered ETL platforms often require modern APIs and data formats that older systems may not support. Gradual migration strategies and hybrid architectures can bridge this gap while organizations modernize their technology infrastructure.

Security and Compliance Considerations

The intelligent nature of AI-powered ETL tools introduces new security considerations. Machine learning models require access to potentially sensitive data for training and optimization. Organizations must implement robust access controls and data masking techniques to protect confidential information while enabling AI capabilities.
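
A minimal sketch of field-level masking, applied before records reach any training or optimization step, might look like the following. It uses keyed hashing (HMAC-SHA256 from the standard library) so that pseudonymized values stay consistent across runs and can still be joined on. The rule names, the `mask_record` helper, and the hard-coded key are assumptions for illustration; a real deployment would load the key from a secrets manager.

```python
import hashlib
import hmac


def pseudonymize(value, key):
    """Replace a value with a stable keyed hash so it can still be joined on."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return local[:1] + "***@" + domain if domain else "***"


def mask_record(record, rules, key):
    """Return a copy of `record` with each rule applied to its field."""
    masked = dict(record)
    for field, rule in rules.items():
        if masked.get(field) is None:
            continue
        if rule == "pseudonymize":
            masked[field] = pseudonymize(str(masked[field]), key)
        elif rule == "mask_email":
            masked[field] = mask_email(str(masked[field]))
        elif rule == "drop":
            del masked[field]
    return masked


if __name__ == "__main__":
    key = b"example-key-only"  # placeholder; never hard-code a real key
    rules = {"customer_id": "pseudonymize", "email": "mask_email", "ssn": "drop"}
    record = {"customer_id": 1042, "email": "alice@example.com",
              "ssn": "000-00-0000", "total": 19.99}
    print(mask_record(record, rules, key))
```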

Compliance with data protection regulations becomes more complex with AI-powered tools. These systems must be configured to respect data residency requirements, retention policies, and individual privacy rights. Fortunately, many modern platforms include built-in compliance features that automatically enforce regulatory requirements.

Future Trends and Innovations

The evolution of AI-powered ETL tools continues at a rapid pace, with emerging trends pointing toward even more sophisticated capabilities. Natural language interfaces are beginning to allow business users to create and modify data pipelines using conversational commands. This democratization of data pipeline construction could fundamentally change how organizations approach data integration.

Quantum computing is a longer-term and still speculative opportunity. Quantum algorithms offer speedups only for specific classes of problems, so any benefit to ETL would most likely come from hard optimization tasks, such as scheduling or resource allocation, that are computationally prohibitive today rather than from general data processing.

The Role of Edge Computing

Edge computing integration is becoming increasingly important as organizations seek to process data closer to its source. AI-powered ETL tools are evolving to support distributed processing architectures that can handle data transformation at edge locations while maintaining centralized orchestration and monitoring capabilities.

This distributed approach reduces latency and bandwidth requirements while enabling real-time data processing capabilities. Edge-enabled data pipelines are particularly valuable for IoT applications and geographically distributed organizations.

Measuring Success and ROI

Establishing clear metrics for success is essential when implementing AI-powered ETL tools. Traditional measures such as processing speed and error rates remain important, but organizations should also track AI-specific metrics such as automation accuracy and learning curve progression. These advanced metrics provide insights into how well the AI components are performing and where additional optimization may be needed.
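
The article does not define these AI-specific metrics precisely, so the sketch below adopts one plausible reading: automation accuracy as the share of automated suggestions that users accepted without changes, and learning curve progression as that accuracy tracked per reporting period. Both definitions and the function names are assumptions.

```python
def automation_accuracy(decisions):
    """Fraction of suggestions accepted; `decisions` is a list of booleans."""
    return sum(decisions) / len(decisions) if decisions else 0.0


def learning_curve(decisions_by_period):
    """Automation accuracy for each period, in order."""
    return [round(automation_accuracy(d), 2) for d in decisions_by_period]


if __name__ == "__main__":
    # Illustrative, made-up review outcomes for three periods.
    periods = [
        [True, False, False, False],
        [True, True, False, False],
        [True, True, True, False],
    ]
    print(learning_curve(periods))
```

A curve that flattens or declines is a useful signal in its own right: it may indicate that source data has drifted or that user feedback is no longer reaching the model.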

Return on investment calculations should consider both direct cost savings and indirect benefits such as improved decision-making speed and enhanced data quality. The compounding nature of AI improvements means that ROI often increases over time as systems become more intelligent and efficient.
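
As a worked example of the calculation, the sketch below uses the common definition ROI = (total benefits - total costs) / total costs and tracks it cumulatively by year. The figures in the usage example are invented purely to show the arithmetic and do not come from any survey or deployment.

```python
def roi(direct_savings, indirect_benefits, total_cost):
    """Return on investment as a fraction of total cost."""
    return (direct_savings + indirect_benefits - total_cost) / total_cost


def cumulative_roi(yearly_benefits, upfront_cost, yearly_cost):
    """Cumulative ROI at the end of each year."""
    results = []
    benefit_total, cost_total = 0.0, float(upfront_cost)
    for benefit in yearly_benefits:
        benefit_total += benefit
        cost_total += yearly_cost
        results.append((benefit_total - cost_total) / cost_total)
    return results


if __name__ == "__main__":
    # Hypothetical numbers (in thousands): benefits grow as automation improves.
    for year, value in enumerate(cumulative_roi([50, 100, 150], 100, 20), start=1):
        print(f"Year {year}: cumulative ROI {value:.1%}")
```

With these illustrative inputs the cumulative ROI starts negative and turns positive in later years, which is the pattern to expect when upfront costs are significant and benefits grow over time.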

Conclusion: Embracing the AI-Powered Future

The transformation of data pipeline construction through AI-powered ETL tools represents more than a technological upgrade; it is a fundamental shift toward intelligent, self-optimizing data infrastructure. Organizations that embrace these capabilities today position themselves for competitive advantages that will compound over time.

The journey toward AI-powered data pipelines requires investment in technology, training, and change management, but the benefits far outweigh the costs. As data volumes continue to grow and business requirements become more complex, intelligent automation becomes not just advantageous but essential for maintaining competitive data capabilities.

Success in this new paradigm requires a holistic approach that combines cutting-edge technology with sound data governance practices and skilled teams. Organizations that master this combination will find themselves well-positioned to extract maximum value from their data assets while building scalable, future-ready data infrastructure that adapts and improves autonomously.
